Publish Spark Streaming System and Application Metrics From AWS EMR to Datadog - Part 2

This post is the second part in a series on getting an AWS EMR cluster that runs a Spark Streaming application ready for production deployment by enabling monitoring.

In the first part of this series we looked at how to publish EMR-specific metrics to the Datadog service. In this post I will show you how to set up your EMR cluster to enable the Spark check, which publishes Spark driver, executor and RDD metrics so they can be graphed on a Datadog dashboard.

To accomplish this task, we will leverage EMR Bootstrap actions. From the AWS Documentation:

"You can use a bootstrap action to install additional software on your cluster. Bootstrap actions are scripts that are run on the cluster nodes when Amazon EMR launches the cluster. They run before Amazon EMR installs specified applications and the node begins processing data. If you add nodes to a running cluster, bootstrap actions run on those nodes also. You can create custom bootstrap actions and specify them when you create your cluster."

This is a two-step process:

- Install the Datadog agent on each node in the EMR cluster.

- Configure the Datadog agent on the master node to run the Spark check at regular intervals and publish Spark metrics.

I have created a gist for each of the two steps. The first script is launched by the bootstrap step during EMR launch; it downloads and installs the Datadog agent on each node of the cluster. Simple! It then starts the second script as a background process.
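The important detail in the first step is that the second script is started in the background, so the bootstrap action can return while the configuration work keeps waiting. The gists themselves are shell scripts; the snippet below is only a minimal Python sketch of the same idea, and `launch_in_background` is a hypothetical helper name, not something taken from the gists.

```python
import subprocess
import sys


def launch_in_background(command):
    """Start `command` detached from the caller and return immediately.

    The child gets its own session (POSIX only), so it keeps running
    after the bootstrap action that launched it has exited.
    """
    return subprocess.Popen(
        command,
        stdin=subprocess.DEVNULL,
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        start_new_session=True,
    )
```

For example, `launch_in_background([sys.executable, "configure_spark_check.py"])` would return right away while the (hypothetically named) configuration script continues on its own.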

Why do we need to run the configuration as a second step?

Remember ☝ that bootstrap actions run before any application is installed on the EMR nodes. In the first step we only installed new software. The second step, however, requires YARN and Spark to be installed already before the Datadog configuration can be completed.

yarn-site.xml does not exist at the time the Datadog agent is installed. Hence we launch a background process to run the Spark check setup script. It waits until yarn-site.xml has been created and contains a value for the YARN property 'yarn.resourcemanager.hostname'. Once that value is found, the script creates the spark.yaml file and moves it under /etc/dd-agent/conf.d. It then sets the appropriate permissions on spark.yaml and restarts the Datadog agent. After the restart, the agent's info subcommand should report the Spark check as running. 🙆
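The wait-then-configure logic described above can be sketched as follows. This is an illustration in Python rather than the actual setup script from the gist. Several things here are assumptions: the yarn-site.xml location, the ResourceManager port 8088, and the `resourcemanager_uri` and `cluster_name` keys, which follow the Spark check example shipped with older Datadog agents and should be verified against the example file for your agent version.

```python
import os
import time
import xml.etree.ElementTree as ET

# Assumed location of the YARN config on an EMR node.
YARN_SITE = "/etc/hadoop/conf/yarn-site.xml"
PROPERTY = "yarn.resourcemanager.hostname"


def read_property(path, name):
    """Return the value of a Hadoop property, or None if it is not there yet."""
    if not os.path.isfile(path):
        return None
    try:
        root = ET.parse(path).getroot()
    except ET.ParseError:
        return None  # the file may still be half-written
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            value = (prop.findtext("value") or "").strip()
            return value or None
    return None


def wait_for_property(path, name, timeout=900, interval=5):
    """Poll the file until the property has a value, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    while True:
        value = read_property(path, name)
        if value:
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{name} not found in {path}")
        time.sleep(interval)


def render_spark_yaml(rm_host, cluster_name, port=8088):
    """Build the text of spark.yaml (keys are assumptions, see above)."""
    return (
        "init_config:\n"
        "\n"
        "instances:\n"
        f"  - resourcemanager_uri: http://{rm_host}:{port}\n"
        f"    cluster_name: {cluster_name}\n"
    )
```

A caller would run `render_spark_yaml(wait_for_property(YARN_SITE, PROPERTY), "my-cluster")`, write the result to spark.yaml under /etc/dd-agent/conf.d, fix its permissions and restart the agent. Those last steps need root and are left out of the sketch.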

Add Custom Bootstrap Actions

There are three ways to launch an EMR cluster, and bootstrap actions can be invoked from each of them. Refer to the AWS guide for invoking bootstrap actions when launching a cluster from the AWS Console or via the AWS CLI. I have created a gist showing our specific bootstrap action script invocation when launching an EMR cluster programmatically.
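If you launch clusters from Python, the bootstrap action ends up as one entry in the `BootstrapActions` parameter of boto3's `run_job_flow` call. The sketch below only builds that entry, so it runs without AWS credentials. It is not the invocation from the gist: the S3 path is a placeholder, and passing the Datadog API key as a script argument is an assumption about how the install script receives it.

```python
def build_bootstrap_actions(install_script_s3_path, datadog_api_key):
    """Return a BootstrapActions list in the shape boto3's run_job_flow expects."""
    return [
        {
            "Name": "Install Datadog agent",
            "ScriptBootstrapAction": {
                "Path": install_script_s3_path,
                # Assumption: the install script takes the API key as its first argument.
                "Args": [datadog_api_key],
            },
        }
    ]


# Placeholder values for illustration only.
actions = build_bootstrap_actions(
    "s3://my-bucket/bootstrap/install_datadog_agent.sh",
    "<DATADOG_API_KEY>",
)
```

The resulting list would then be passed as `BootstrapActions=actions` to `boto3.client("emr").run_job_flow(...)`, alongside the cluster name, instance configuration and other settings you already use.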

Validation

Finally, to confirm that the bootstrap actions completed successfully, you can check the EMR logs in the S3 log directory you specified when launching the cluster. Bootstrap action logs can be found under a path like <S3_BUCKET>/<emr_cluster_log_folder>/<emr_cluster_id>/node/<instance_id>/bootstrap-actions
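If you check these logs often, the path pattern above is easy to wrap in a small helper. This is just a convenience sketch; the function name and the example IDs are made up for illustration.

```python
def bootstrap_log_prefix(log_uri, cluster_id, instance_id):
    """Build the S3 prefix holding a node's bootstrap action logs.

    `log_uri` is the log location given at cluster launch, i.e. the
    <S3_BUCKET>/<emr_cluster_log_folder> part of the pattern.
    """
    return f"{log_uri.rstrip('/')}/{cluster_id}/node/{instance_id}/bootstrap-actions/"


# Example with made-up identifiers.
prefix = bootstrap_log_prefix("s3://my-bucket/emr-logs/", "j-EXAMPLE", "i-EXAMPLE")
```

You could then list that prefix with the AWS CLI or an S3 client to find the stdout and stderr of each bootstrap action.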

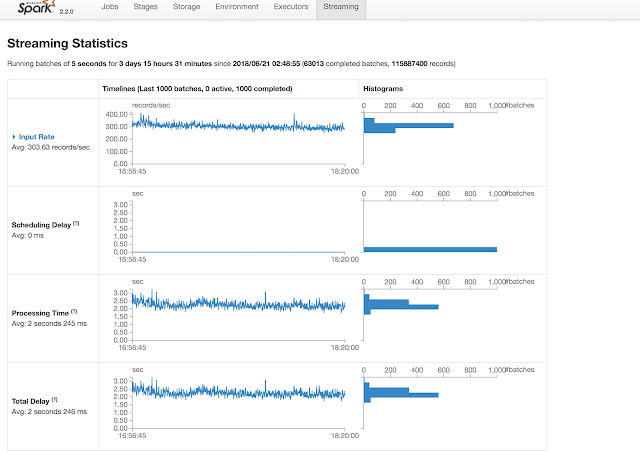

Within a few minutes of deploying your Spark Streaming application on this cluster, you should also start receiving the Spark metrics in Datadog, as shown in the screenshot below:

One more way to validate is to SSH into an EMR instance and execute:

sudo /etc/init.d/datadog-agent info

In the output, you should see the Spark check being run as follows:

Now that we have the Datadog agent installed on the driver and executor nodes of the EMR cluster, we have done the groundwork to publish metrics from our application to Datadog. In the next part of this series, I will demonstrate how to publish metrics from your application code.

If you have questions or suggestions, please leave a comment below.
